I first started shooting 360 still photography using an SLR film camera with a special pano head, stitching still-frame QTVR (QuickTime VR) movies from scanned photographs. A single QTVR pano was a significant undertaking, and most hardware at that time was incapable of even full-screen playback. I shot my first 360 video for a pitch demo in 2013:

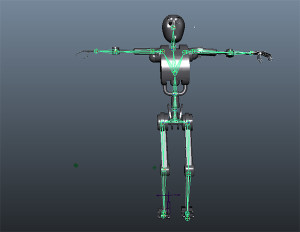

The fact that this was even possible, never mind that it was playing on a gyroscopically controlled mobile device, was unimaginable to me only a few years prior. Now, 12 months later, I’ve been experimenting with shooting 360 video in 3D for virtual reality headsets. The concept has not changed; it just now involves shooting 400 panos per second across 14 cameras.

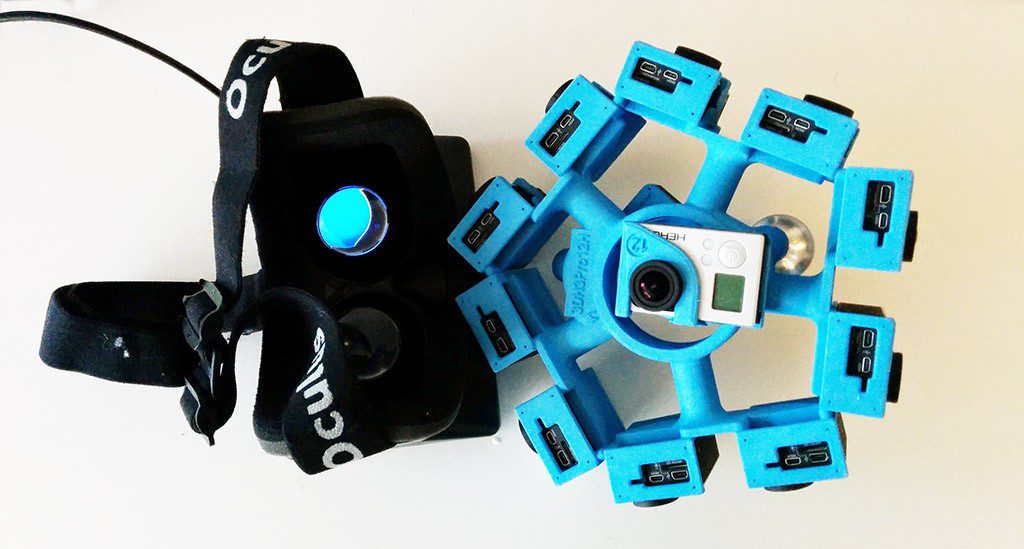

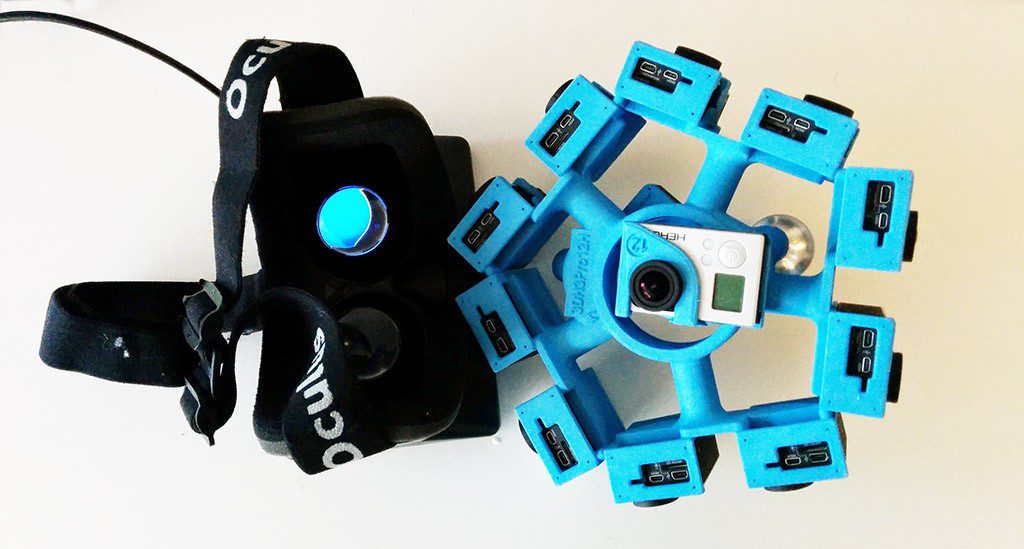

I shoot monoscopic 360 video using a six-camera GoPro rig, but this only goes so far in VR. It’s possible to feed a single 360 video, duplicated for both the left and right eye, but the result is a very flat experience. Aside from the head tracking, it is little different from waving around an iPad. To shoot 360 in stereo I needed a way to shoot two 360 videos simultaneously, 60mm apart (the average interpupillary distance, or IPD).

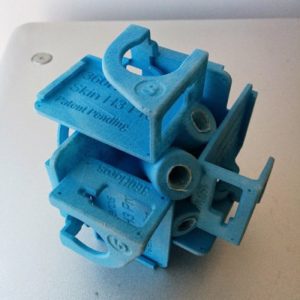

One can’t simply place two of these six-camera heads side by side, since each would occlude the other. The solutions so far have been to double the number of cameras, with each pair offset for the left and right eye. This works, but it increases the diameter of the rig, which exacerbates parallax, one of the biggest issues when shooting and stitching 360 video. Parallax was enough of an issue that when shooting 3D 360 video I found it very difficult to get a clean stitch within about 8-10 feet of the camera. Unfortunately this is the range in which 3D seems to have the most impact, but careful orientation of the cameras to minimize motion across seams does help significantly.
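
To give a rough feel for why a wider rig makes close subjects harder to stitch, here is a minimal sketch of the geometry. It assumes a simplified model in which two adjacent lenses sit a fixed distance apart and the subject is straight ahead of their midpoint; the function name `parallax_deg` and the two lens spacings are hypothetical values chosen for illustration, not measurements from any actual rig.

```python
import math

FEET_TO_M = 0.3048


def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle in degrees subtended at the subject by two lens centers.

    Simplified model: the subject lies on the perpendicular bisector of the
    line joining the two lenses, so the angle is 2 * atan(b / (2 * d)).
    """
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))


# Hypothetical lens spacings for illustration only (not from a real rig).
rigs = [("compact rig", 0.06), ("wider rig", 0.12)]

for label, baseline_m in rigs:
    for feet in (3, 10, 30):
        angle = parallax_deg(baseline_m, feet * FEET_TO_M)
        print(f"{label}: subject at {feet} ft -> {angle:.2f} deg between views")
```

In this model the angle between the two views grows as the lenses move apart and shrinks as the subject moves away, which is consistent with seams being hardest to hide on nearby subjects.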
