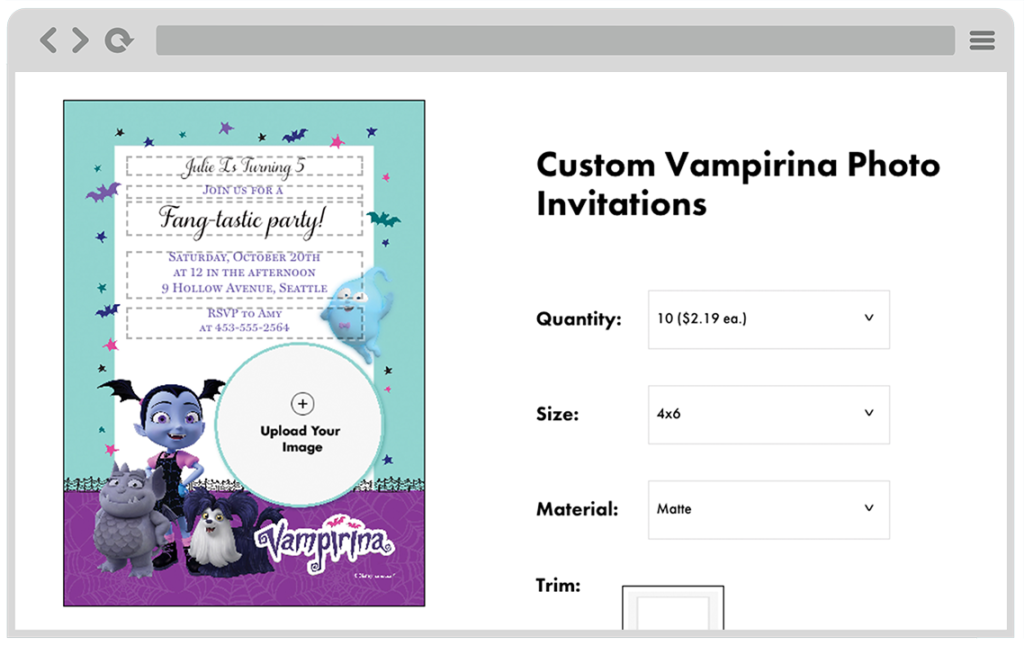

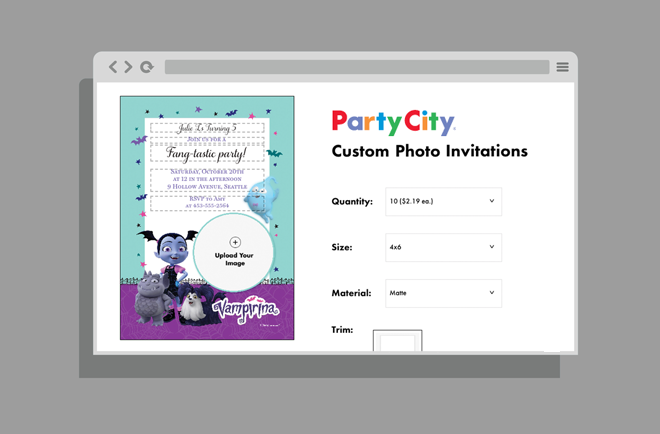

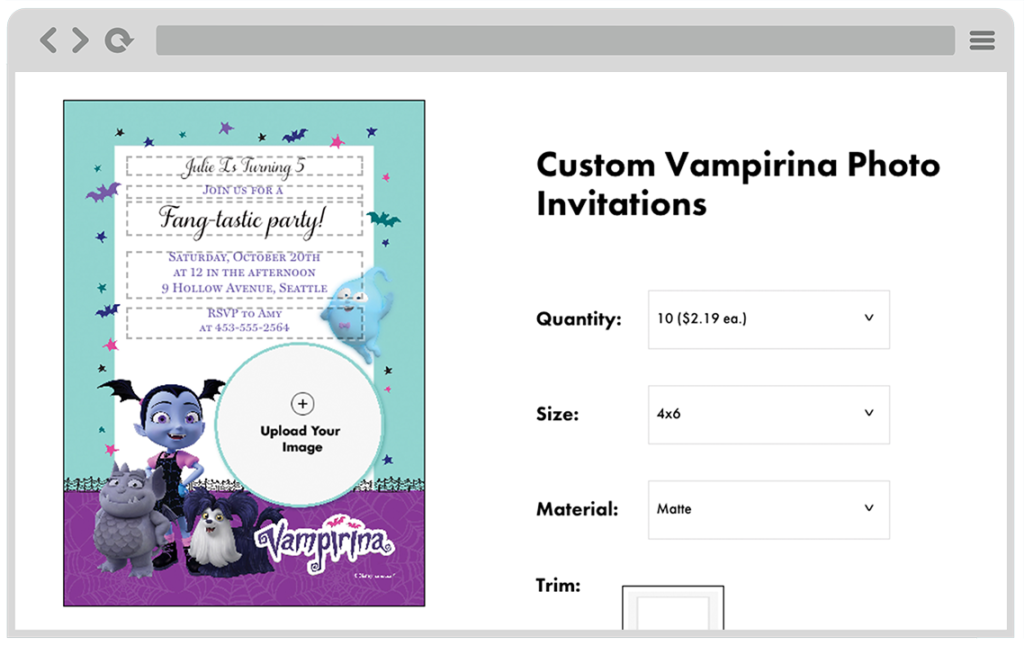

Party City had been using a third party to manage its web-to-print production for various customized products such as invitations, banners, and yard signs. In the late winter of 2019, they built out their own facility and began shifting to in-house printing to achieve greater profitability on these products. The job of building a web app for customers to interface with this new system largely landed in my lap.

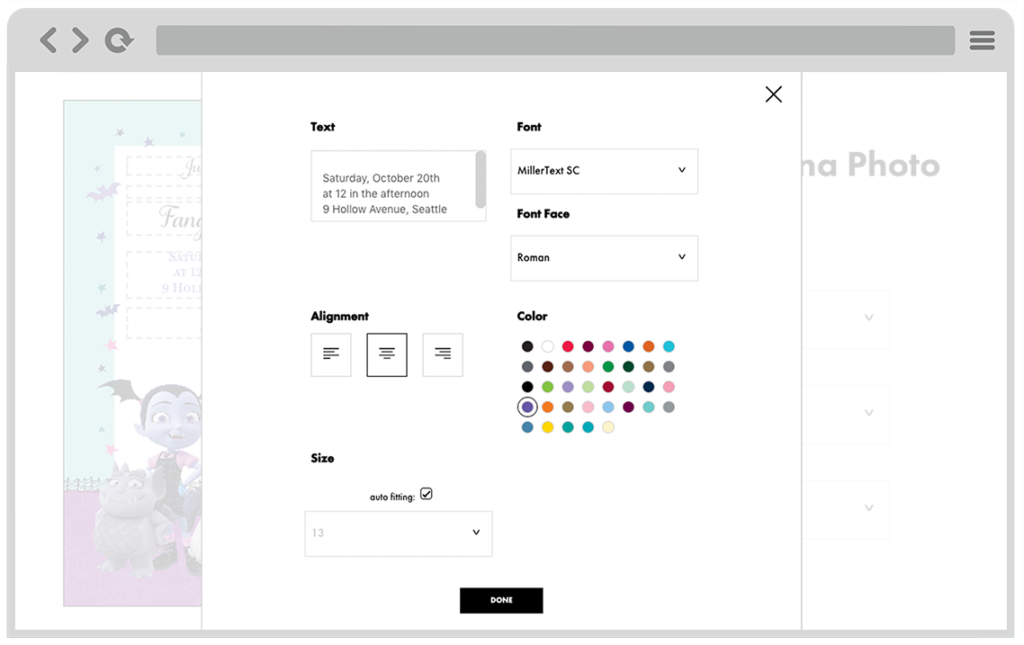

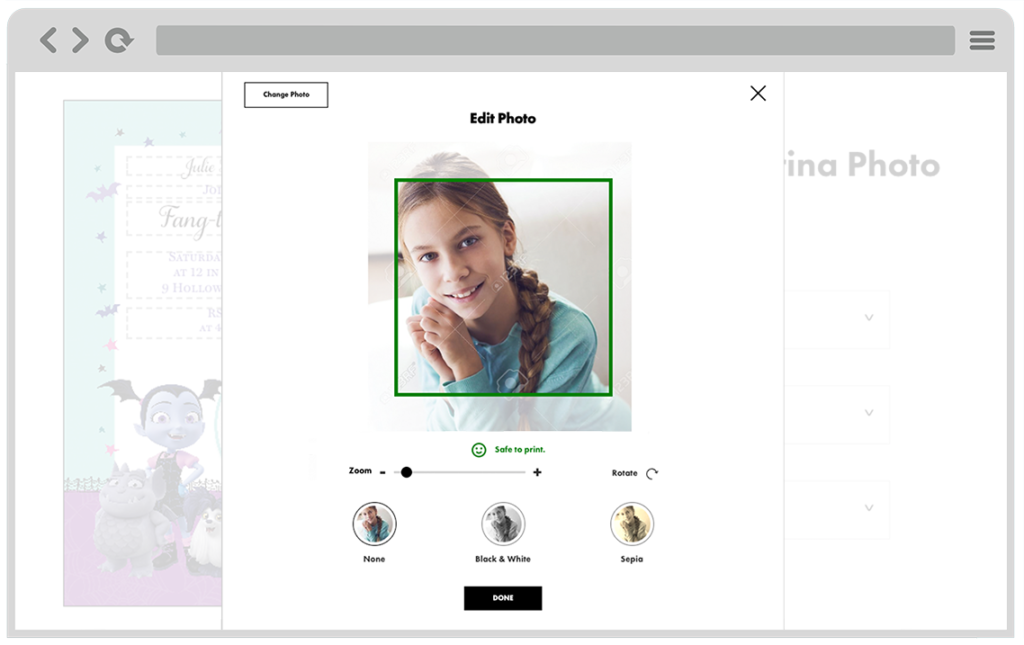

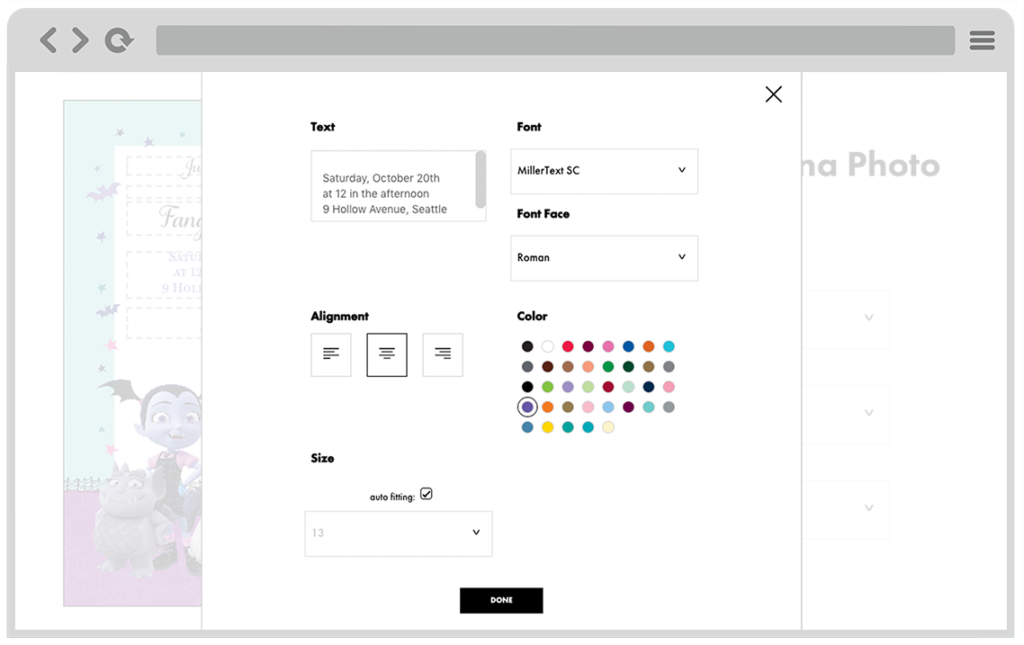

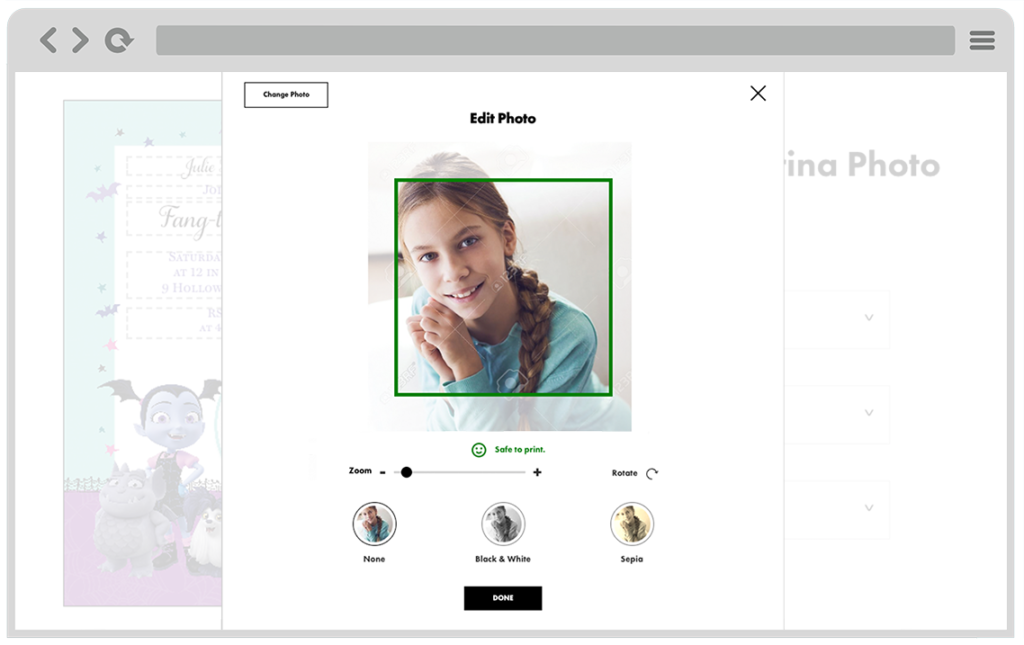

The new printers Party City purchased were set up to use XMPie services, which interact with documents using the XLIM format, an XML-like data structure that defines the elements and layout of a document. Many thousands of these XLIM templates would be exported from InDesign and uploaded to the XMPie server by the design teams. The web app I created was responsible for loading these templates, allowing users to enter and edit format-mapped text, manipulate and upload images, and then send everything back to the server to run proofs and final print jobs.

The app was built as a single-page web app leveraging uEdit, a library provided by XMPie for manipulating XLIM files. Originally ported from Flash, uEdit's main job is to parse XLIM files and translate their structure accurately to HTML so it can be manipulated in a web browser. The library I was provided hadn’t seen an update since 2013 and came with little documentation. Learning the inner workings of XLIM required reverse engineering the single uEdit demo I could get my hands on, plus a lot of trial and error. It sure felt as if I was the only developer to use this product in many years. Most of its functions were designed for a very narrow point-and-click use case, but because our designs relied on modal dialogs, I ran into a handful of issues and shortcomings that I had to code around and, in some cases, fix by directly patching the old minified uEdit source code.

I was working in parallel with multiple design teams who were pumping out new XLIM templates every day, and we quickly uncovered many incompatibilities. InDesign allowed for a wide range of formatting structures that were not all properly handled by uEdit. Often document properties would be applied in part to a container group and then redefined on individual text boxes, so much of my dev effort was spent pre-processing the XLIM files to pull apart the various nested properties and restructure the document into a compatible format. It was a never-ending game of Whac-A-Mole, and while we attempted to set up some basic standards for the designers to follow, the burden was always on the front-end code.
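To give a rough sense of what that pre-processing involved, here is a minimal TypeScript sketch of the general idea: pushing properties defined on a container group down onto each text box, with the text box's own values taking priority. This is not the production code and it does not use the real XLIM schema or any uEdit API. The `TemplateNode` shape, the property names, and the `flattenProps` function are all hypothetical stand-ins for illustration.

```typescript
// Hypothetical, simplified stand-in for a parsed template tree.
// The real XLIM structure is different; these names are illustrative only.
type Props = Record<string, string>;

interface TemplateNode {
  kind: "group" | "textBox";
  id: string;
  props: Props;
  children?: TemplateNode[];
}

interface FlatTextBox {
  id: string;
  props: Props;
}

// Walk the tree, carrying inherited properties downward. A node's own
// value overrides whatever its ancestors defined for the same key.
function flattenProps(node: TemplateNode, inherited: Props = {}): FlatTextBox[] {
  const merged: Props = { ...inherited, ...node.props };
  if (node.kind === "textBox") {
    return [{ id: node.id, props: merged }];
  }
  const result: FlatTextBox[] = [];
  for (const child of node.children || []) {
    for (const box of flattenProps(child, merged)) {
      result.push(box);
    }
  }
  return result;
}

// Example: the group sets a font and size, one text box overrides the size.
const template: TemplateNode = {
  kind: "group",
  id: "g1",
  props: { font: "Helvetica", size: "12" },
  children: [
    { kind: "textBox", id: "t1", props: { size: "24" } },
    { kind: "textBox", id: "t2", props: {} },
  ],
};

const flat = flattenProps(template);
console.log(JSON.stringify(flat));
```

After a pass like this, every text box carries a complete set of properties of its own, so nothing downstream has to resolve group-level inheritance.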

This was one of those projects where nothing ever seemed to work easily, and we were plagued by a whole additional set of bugs when testing actual jobs run on the XMPie server. Even as we resolved browser display issues, we found that the proofs coming back from the printer didn't always match what the browser showed. Fonts behaved oddly, colors reverted, and text would shift or fail to scale properly. In some browsers, certain fonts just didn’t appear. IE gave us fits when manipulating large print-resolution images. And on it went for many months, with the three teams (the template designers, the XMPie backend developers, and the front-end team) all working closely together.

Somehow, in the end, we were able to make it all work. Party City is now able to offer new customizable print products and is rapidly taking more and more of its web-to-print production in-house. While I pray I never have to work with uEdit or another XLIM file ever again, I do remember this project fondly for its challenging bugs and the opportunity to work with a talented team of developers.

![[Redacted] Banking app](https://www.natwales.com/wp-content/uploads/2018/12/rn.gif)

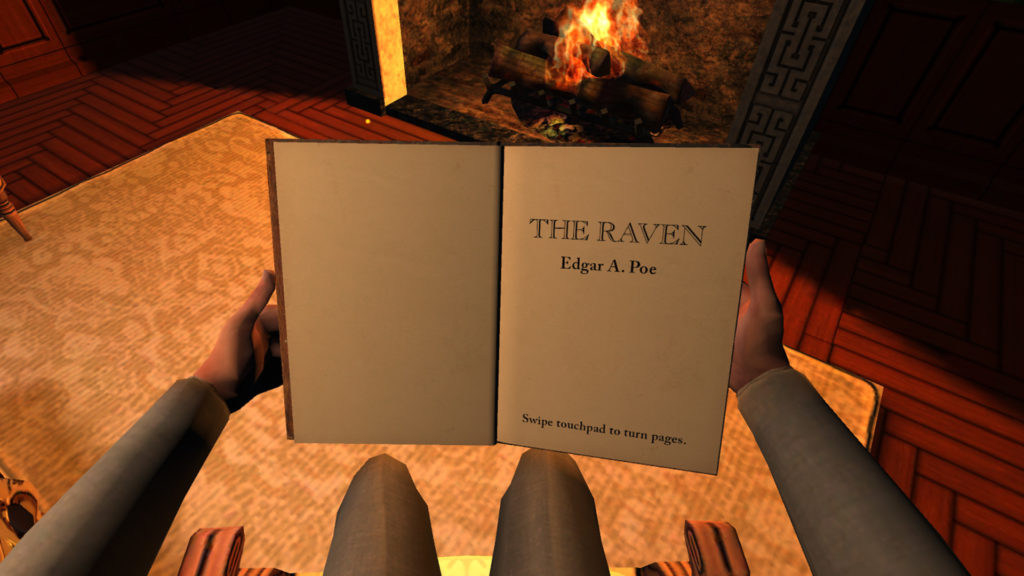

is currently available as a free download on the Gear VR store in the concepts category. As of Dec 2017 it has over 41K installs.
